webside_parser

1. Environment dependencies

Python 2.7

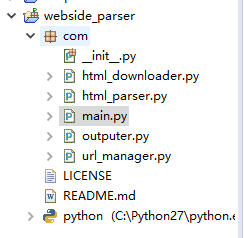

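2. Directory structure

- html_downloader: downloads HTML

- html_parser: parses the HTML and returns the extracted data. It uses the pyquery library, whose jQuery-like syntax is convenient and practical

- outputer: outputs the results

- url_manager: manages URLs

- main: program entry point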

main (program entry point):

    # -*- coding: utf-8 -*-
    from com import url_manager, html_downloader, html_parser, outputer


    class HtmlScheduler(object):
        def __init__(self):
            self.urls = url_manager.UrlManager()
            self.downloader = html_downloader.HtmlDownloader()
            self.parser = html_parser.HtmlParser()
            self.outputer = outputer.Outputer()

        def parse(self):
            # Take one pending URL, download it, then collect its links and image data.
            new_url = self.urls.get_new_url()
            if new_url is not None:
                html = self.downloader.download(new_url)
                new_urls, new_content = self.parser.parse(html)
                self.urls.add_new_urls(new_urls)
                if new_content is not None:
                    self.outputer.collect_data(new_content)

        def start_parse(self, root_url, count):
            # Crawl at most `count` pages, starting from root_url.
            # root_url is passed in explicitly instead of being read from a global.
            self.urls.add_new_url(root_url)
            try:
                while self.urls.has_new_url() and count > 0:
                    self.parse()
                    count = count - 1
                print 'success'
            except Exception as e:
                print 'error: %s' % e
            finally:
                # Write out whatever was collected, even after an error.
                self.outputer.output()
                print 'new urls:%s' % self.urls.new_urls
                print 'old urls:%s' % self.urls.old_urls


    if __name__ == '__main__':
        root_url = 'https://www.imooc.com/course/list'
        html_scheduler = HtmlScheduler()
        html_scheduler.start_parse(root_url, 20)
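To see how the scheduling loop, the URL queue, and the page limit interact without touching the network, a small dry run can help. This is only a sketch in Python 3 (the article's code targets Python 2.7); `FAKE_SITE`, `crawl`, and the page contents are hypothetical stand-ins for the downloader and parser, not part of the project.

```python
# Dry run of the scheduling loop. FAKE_SITE and crawl() are hypothetical
# stand-ins: no real HTTP request or HTML parsing happens here.

# Each "page" maps to (links found on it, (title, image src) tuples found on it).
FAKE_SITE = {
    '/course/list': (['/course/list?page=2', '/course/list?page=3'],
                     [('Course A', '//img.example/a.jpg')]),
    '/course/list?page=2': (['/course/list', '/course/list?page=3'],
                            [('Course B', '//img.example/b.jpg')]),
    '/course/list?page=3': ([], [('Course C', '//img.example/c.jpg')]),
}


def crawl(root_url, count):
    """Visit at most `count` pages and never visit the same page twice."""
    new_urls = {root_url}   # discovered but not yet visited
    old_urls = set()        # already visited
    collected = []
    while new_urls and count > 0:
        url = new_urls.pop()
        old_urls.add(url)
        links, images = FAKE_SITE[url]
        collected.extend(images)
        # Queue only links that have not been visited yet.
        new_urls.update(link for link in links if link not in old_urls)
        count -= 1
    return old_urls, collected


visited, images = crawl('/course/list', 20)
print(sorted(visited))
print(sorted(title for title, _ in images))
```

With a limit of 20 the loop stops as soon as the queue is empty; with a limit of 1 it stops after the root page even though more links are waiting.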

html_parser:

    from pyquery import PyQuery as pq


    class HtmlParser(object):
        def parse(self, content):
            # Use fresh lists for every page so results from earlier pages
            # are not returned (and collected) again.
            links_a = []
            links_image = []
            doc = pq(content)
            # Candidate links to crawl next.
            for a in doc('.text-page-tag').items():
                href = a.attr('href')
                if href is not None:
                    links_a.append(href)
            # Course banner images, paired with the course title.
            for image in doc('.course-banner').items():
                src = image.attr('data-original')
                alt = image.closest('.course-card').find('.course-card-content h3').text()
                if src is not None:
                    links_image.append((alt, src))
            return links_a, links_image

html_downloader:

    import urllib2

    UA = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.117 Safari/537.36'


    class HtmlDownloader(object):
        def download(self, url):
            # Send a browser User-Agent header with the request.
            request = urllib2.Request(url)
            request.add_header('user-agent', UA)
            response = urllib2.urlopen(request)
            content = response.read()
            return content
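`urllib2` exists only in Python 2. For readers on Python 3, the same download step could be sketched with `urllib.request` roughly as follows. The `build_request` helper, the 10-second timeout, and returning `None` on failure are assumptions made for this illustration, not part of the original downloader.

```python
import urllib.request

UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
      '(KHTML, like Gecko) Chrome/66.0.3359.117 Safari/537.36')


def build_request(url):
    """Create a request that carries the browser User-Agent header."""
    return urllib.request.Request(url, headers={'User-Agent': UA})


def download(url, timeout=10):
    """Return the response body as bytes, or None if the request fails.

    The timeout value and the None-on-failure behaviour are assumptions.
    """
    try:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
            return response.read()
    except OSError as exc:  # urllib.error.URLError is a subclass of OSError
        print('download failed for %s: %s' % (url, exc))
        return None
```

Calling `download('https://www.imooc.com/course/list')` would then return the page bytes when the request succeeds.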

outputer:

    import urllib


    class Outputer(object):
        def __init__(self):
            self.data = []

        def collect_data(self, data):
            if data is not None:
                for item in data:
                    self.data.append(item)

        def output(self):
            # Each item is a (title, src) tuple. 'http:' is prepended to src,
            # so src is assumed to be scheme-relative ('//host/path').
            for item in self.data:
                if item[1] is not None:
                    print 'output image:%s' % item[0]
                    try:
                        urllib.urlretrieve('http:' + item[1], 'D:\\parser_pics\\%s.jpg' % item[0])
                    except Exception:
                        print 'output image %s error' % item[1]
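The outputer uses the course title as the file name, and Windows rejects file names containing characters such as `/`, `:` or `?`. One possible way to guard against that is sketched below in Python 3. The helper names `normalize_image_url`, `safe_filename`, and `target_path`, and the `'untitled'` fallback, are hypothetical and introduced only for this example.

```python
import os
import re


def normalize_image_url(src, scheme='http'):
    """Turn a scheme-relative src ('//host/path') into an absolute URL."""
    if src.startswith('//'):
        return '%s:%s' % (scheme, src)
    return src  # already absolute, leave it untouched


def safe_filename(title, ext='.jpg'):
    """Replace characters that Windows forbids in file names with '_'."""
    cleaned = re.sub(r'[\\/:*?"<>|]', '_', title).strip()
    return (cleaned or 'untitled') + ext


def target_path(directory, title):
    """Build the output path for one image inside `directory`."""
    return os.path.join(directory, safe_filename(title))


print(normalize_image_url('//img.example.com/a.jpg'))
print(safe_filename('Python 2/3: what changed?'))
```

The result of `target_path` could be passed as the second argument of the retrieve call in place of the hand-built `'D:\\parser_pics\\%s.jpg'` string.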

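url_manager:

    class UrlManager(object):
        def __init__(self):
            self.new_urls = set()   # discovered, not yet crawled
            self.old_urls = set()   # already crawled

        def has_new_url(self):
            return len(self.new_urls) != 0

        def add_new_url(self, url):
            # Only course-list pages are crawled.
            if url is None or '/course/list' not in url:
                return
            # Make site-relative links absolute before checking for duplicates,
            # because old_urls stores absolute URLs.
            if 'https://' not in url:
                url = 'https://www.imooc.com' + url
            if url not in self.old_urls:
                self.new_urls.add(url)

        def add_new_urls(self, urls):
            for url in urls:
                if url is not None:
                    self.add_new_url(url)

        def get_new_url(self):
            # Move one pending URL to the crawled set and return it.
            url = self.new_urls.pop()
            self.old_urls.add(url)
            return url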
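Duplicate detection is most reliable when every URL is brought into one canonical form before it is compared with the set of visited URLs. A minimal Python 3 sketch of that idea follows, using the standard library's `urllib.parse.urljoin` instead of string concatenation. `BASE_URL` is a name introduced here for illustration.

```python
from urllib.parse import urljoin

BASE_URL = 'https://www.imooc.com'  # assumed site root, taken from the root URL


class UrlManager:
    def __init__(self):
        self.new_urls = set()   # discovered, not yet crawled
        self.old_urls = set()   # already crawled

    def add_new_url(self, url):
        # Only course-list pages are of interest.
        if not url or '/course/list' not in url:
            return
        # urljoin leaves absolute URLs alone and resolves relative ones.
        absolute = urljoin(BASE_URL, url)
        if absolute not in self.old_urls:
            self.new_urls.add(absolute)

    def has_new_url(self):
        return bool(self.new_urls)

    def get_new_url(self):
        url = self.new_urls.pop()
        self.old_urls.add(url)
        return url


manager = UrlManager()
manager.add_new_url('/course/list?page=2')
manager.add_new_url('https://www.imooc.com/course/list?page=2')  # same page
manager.add_new_url('/article/123')                                # filtered out
first = manager.get_new_url()
manager.add_new_url('/course/list?page=2')                         # already crawled
print(first, manager.has_new_url())
```

Because both spellings of page 2 resolve to the same absolute URL, the page is queued once and is not queued again after it has been crawled.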

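This crawler produces the same output as the one in the previous article; the only difference is that it is implemented in Python. That's all for today, I have to head off to work~~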
