2 answers

Contributor with 1836 experience points, more than 4 likes

Here is a follow-up answer to your last question.

import pandas as pd
import seaborn as sns
from scipy.stats import zscore
from sklearn.preprocessing import MinMaxScaler

# Load the Titanic dataset and drop every row that has a missing value
titanic = sns.load_dataset("titanic")
titanic = titanic.dropna().copy()

titanic["age"].plot.hist(
    bins=50,
    title="Histogram of the age variable",
)

# Flag ages whose z-score is at least 2.5 standard deviations from the mean
titanic["age_zscore"] = zscore(titanic["age"])
titanic["is_outlier"] = titanic["age_zscore"].abs() >= 2.5
titanic[titanic["is_outlier"]]

# Scatter plot of age against fare
ageAndFare = titanic[["age", "fare"]]
ageAndFare.plot.scatter(x="age", y="fare")

# Rescale both columns to the [0, 1] range
scaler = MinMaxScaler()
ageAndFare = scaler.fit_transform(ageAndFare)
ageAndFare = pd.DataFrame(ageAndFare, columns=["age", "fare"])

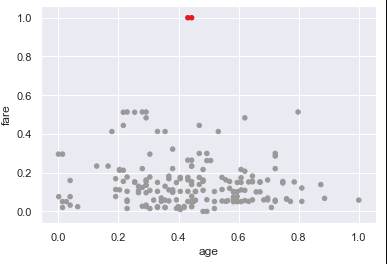

ageAndFare.plot.scatter(x="age", y="fare")

from sklearn.cluster import DBSCAN

# Cluster the scaled points; fit_predict returns one cluster label per row
outlier_detection = DBSCAN(
    eps=0.5,
    metric="euclidean",
    min_samples=3,
    n_jobs=-1,
)
clusters = outlier_detection.fit_predict(ageAndFare)
clusters

import matplotlib.pyplot as plt

# Color each point by its DBSCAN cluster label
cmap = plt.get_cmap("Accent")
ageAndFare.plot.scatter(
    x="age",
    y="fare",
    c=clusters,
    cmap=cmap,
    colorbar=False,
)
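The `clusters` array holds one label per row, and scikit-learn's DBSCAN marks noise points with the label -1. Here is a minimal sketch of pulling those rows out. It uses a synthetic stand-in for the scaled data (the `demo` frame and the `eps = 0.1` setting are assumptions chosen for this toy example, not values from the Titanic data), so it runs without downloading anything:

```python
import numpy as np
import pandas as pd
from sklearn.cluster import DBSCAN

# Hypothetical data: two tight groups plus one isolated point far from both
rng = np.random.default_rng(0)
points = np.vstack([
    rng.normal(0.2, 0.02, size=(50, 2)),
    rng.normal(0.8, 0.02, size=(50, 2)),
    [[0.2, 0.9]],
])
demo = pd.DataFrame(points, columns=["age", "fare"])

# eps is an illustrative choice for this toy data, not a recommended value
labels = DBSCAN(eps=0.1, min_samples=3).fit_predict(demo)

# Rows labelled -1 are the ones DBSCAN treats as noise
noise = demo[labels == -1]
print(noise)
```

On real data the number of rows that end up labelled -1 depends heavily on `eps` and `min_samples`, so it is worth checking the result for a few settings.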

For full details, see this link.

https://www.mikulskibartosz.name/outlier-detection-with-scikit-learn/

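Until today, I had never heard of the "Local Outlier Factor". When I googled it, what I found seemed to suggest that it is derived from DBSCAN. In the end, I think my first answer is actually the best way to detect outliers. DBSCAN is a clustering algorithm that happens to find outliers, which it treats as "noise". I think DBSCAN's main purpose is clustering, not anomaly detection. In any case, choosing the hyperparameters correctly takes some skill. Also, DBSCAN can be slow on very large datasets because it implicitly needs to compute the empirical density around every sample point, which leads to quadratic worst-case time complexity.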
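For comparison, scikit-learn ships the Local Outlier Factor as `sklearn.neighbors.LocalOutlierFactor`. The following is a rough sketch on synthetic data. The data and the `n_neighbors=20` setting are assumptions for illustration, not values taken from the question:

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

# Hypothetical data: two dense groups and one point far away from both
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(0.0, 0.1, size=(50, 2)),
    rng.normal(3.0, 0.1, size=(50, 2)),
    [[10.0, 10.0]],
])

lof = LocalOutlierFactor(n_neighbors=20)
lof_labels = lof.fit_predict(X)          # -1 marks outliers, 1 marks inliers
scores = lof.negative_outlier_factor_    # more negative means more anomalous

print("isolated point flagged:", lof_labels[-1] == -1)
print("most anomalous row:", scores.argmin())
```

Unlike DBSCAN, this estimator is built specifically for outlier detection and returns a score per point, which can be easier to threshold than a noise label.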

Contributor with 1802 experience points, more than 4 likes

You: I want to apply k-means to eliminate any anomalies.

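Actually, KMeans will not remove anomalies; it will assign each of them to the nearest cluster. The loss function that k-means minimizes is the sum of squared distances from each point to its assigned cluster centroid. If you want to kick out outliers, consider using the z-score method.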
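To make the point about k-means concrete, here is a small sketch on synthetic data (the data and `n_clusters=2` are assumptions for illustration, not the asker's setup). It shows that an obvious outlier still receives a regular cluster label, and that scikit-learn's `inertia_` equals the sum of squared distances to the assigned centroids:

```python
import numpy as np
from sklearn.cluster import KMeans

# Hypothetical data: two dense groups and one point far away from both
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(0.0, 0.1, size=(50, 2)),
    rng.normal(3.0, 0.1, size=(50, 2)),
    [[10.0, 10.0]],
])

kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
km_labels = kmeans.labels_

# Sum of squared distances from each point to its assigned centroid
manual_loss = ((X - kmeans.cluster_centers_[km_labels]) ** 2).sum()

print("label of the far-away point:", km_labels[-1])
print("manual loss matches inertia_:", np.isclose(manual_loss, kmeans.inertia_))
```

There is no "noise" label in k-means, so the far-away point is absorbed into a cluster and pulls that cluster's centroid towards itself.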

import numpy as np
import pandas as pd
from scipy import stats

# import your data
df = pd.read_csv('C:\\Users\\your_file.csv')

# keep only the numeric columns
numerics = ['int16', 'int32', 'int64', 'float16', 'float32', 'float64']
df = df.select_dtypes(include=numerics)

# count rows in DF before kicking out records with z-score over 3
df.shape

# handle NaNs
df = df.fillna(0)

# keep only the rows where every column has |z-score| < 3
df = df[(np.abs(stats.zscore(df)) < 3).all(axis=1)]

# count rows in DF after kicking out records with z-score over 3
df.shape

# the same filter applied to random data
df = pd.DataFrame(np.random.randn(100, 3))
df = df[(np.abs(stats.zscore(df)) < 3).all(axis=1)]

# count rows in DF after kicking out records with z-score over 3
df.shape

Also, when you have some free time, take a look at these links.

https://medium.com/analytics-vidhya/effect-of-outliers-on-k-means-algorithm-using-python-7ba85821ea23

https://statisticsbyjim.com/basics/outliers/
