Using TensorBoard to Track Training Metrics

In the previous lesson, we learned how to use TensorBoard to record basic quantities such as the loss. Can we log more complex metrics, or even metrics we define ourselves? Yes. In this lesson we look at how to write more complex metrics, including fully custom ones, to TensorBoard.

There are two broad ways to log custom metrics:

- using callbacks to produce the custom output;

- adding the output manually inside a custom training loop.

In this lesson we go through each approach in turn.

1. Customizing output with callbacks

With this approach we use either one of the built-in callbacks or a callback we write ourselves, and put metric-writing code inside it. Because the callback runs during training, it can emit our custom output as training progresses.

The general steps for custom output with a callback are:

- create a file writer with the tf.summary.create_file_writer() API; we use this writer to record our metrics;

- write a custom callback, and inside it call tf.summary.scalar() to write the metric;

- pass that callback to the training run.

Here we use a learning-rate schedule as the example:

```python
import tensorflow as tf

# Load MNIST and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

# File writer for the custom metrics; set_as_default() sends every later
# tf.summary.* call that has no explicit writer to this log directory
file_writer = tf.summary.create_file_writer("logs/2")
file_writer.set_as_default()

# Learning-rate schedule that also logs the rate it returns.
# The rate starts at 0.1 and halves every epoch (0.1, 0.05, 0.025, ...).
def my_lr_schedule(epoch):
    learning_rate = 0.1 / (2 ** epoch)
    tf.summary.scalar('lr', data=learning_rate, step=epoch)
    return learning_rate

lr_callback = tf.keras.callbacks.LearningRateScheduler(my_lr_schedule)

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(256, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=[])

# Pass the learning-rate callback to fit()
model.fit(
    x_train,
    y_train,
    epochs=3,
    validation_data=(x_test, y_test),
    callbacks=[lr_callback],
)

# Write any buffered events to disk
file_writer.flush()
```
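The values a schedule will log can be checked without TensorFlow. A schedule written as learning_rate = 0.1 followed by learning_rate /= 2 ignores its epoch argument, so it returns 0.05 on every call and the logged "curve" is a flat line. The sketch below contrasts that with a schedule that halves the rate each epoch; constant_schedule and halving_schedule are hypothetical names chosen for illustration.

```python
def constant_schedule(epoch):
    # Resets to 0.1 and halves once on every call: epoch is ignored
    learning_rate = 0.1
    learning_rate /= 2
    return learning_rate


def halving_schedule(epoch, initial_lr=0.1):
    # Halves the rate once per epoch: 0.1, 0.05, 0.025, ...
    return initial_lr / (2 ** epoch)


print([constant_schedule(e) for e in range(3)])  # [0.05, 0.05, 0.05]
print([halving_schedule(e) for e in range(3)])   # [0.1, 0.05, 0.025]
```

Only a schedule whose output depends on epoch produces a line in TensorBoard that actually changes.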

The model above is small and trains quickly, so afterwards we can view the results by running:

```bash
tensorboard --logdir logs/2
```

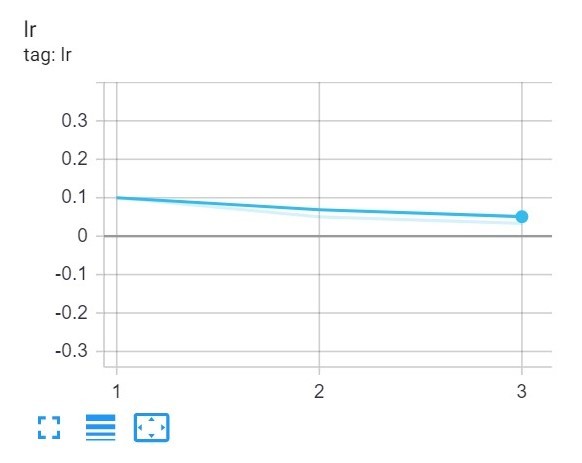

于是,我们便可以查看到我们自定义的学习率指标的曲线的变化情况:

Then we can see how the curve of our custom learning rate indicator changes:
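Besides built-in callbacks such as LearningRateScheduler, we can subclass tf.keras.callbacks.Callback and write a metric from one of its hooks. The sketch below assumes TensorFlow 2.x; the WeightNormLogger class, the weight_norm tag and the logs/custom_callback directory are hypothetical names chosen for illustration, and random arrays stand in for MNIST so it runs quickly. The callback owns its writer and uses writer.as_default() in a with-block, so it does not depend on a globally set default writer.

```python
import os

import numpy as np
import tensorflow as tf


class WeightNormLogger(tf.keras.callbacks.Callback):
    """Hypothetical example callback: writes the global L2 norm of the
    model's weights to TensorBoard at the end of every epoch."""

    def __init__(self, log_dir):
        super().__init__()
        # The callback owns its writer instead of relying on a default writer
        self.writer = tf.summary.create_file_writer(log_dir)

    def on_epoch_end(self, epoch, logs=None):
        # get_weights() returns NumPy arrays; combine them into one L2 norm
        weights = self.model.get_weights()
        norm = float(np.sqrt(sum(np.sum(np.square(w)) for w in weights)))
        # as_default() makes this writer the target only inside the with-block
        with self.writer.as_default():
            tf.summary.scalar("weight_norm", data=norm, step=epoch)
        self.writer.flush()


# Tiny random stand-in for MNIST, only to exercise the callback
x = np.random.rand(64, 28, 28).astype("float32")
y = np.random.randint(0, 10, size=(64,))

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

log_dir = "logs/custom_callback"
model.fit(x, y, epochs=2, verbose=0, callbacks=[WeightNormLogger(log_dir)])

# The writer leaves an events.out.tfevents.* file that TensorBoard reads
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)
```

Running tensorboard --logdir logs/custom_callback should then show a weight_norm scalar with one point per epoch.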

2. Customizing output metrics in a custom training loop

In the example above, the key statement inside the callback is the one that writes the metric:

```python
tf.summary.scalar()
```

That raises a question: can we decide for ourselves when to write output and what to write? Yes. In this subsection we use tf.summary.scalar(), the core API for this, to write custom metrics.

First, we again use the same MNIST data and model as before:

```python
import tensorflow as tf
import numpy as np

(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()

# Normalize pixel values to [0, 1]
train_images = train_images / 255.0
test_images = test_images / 255.0

# Shuffled training batches and ordered validation batches of 64 samples
train_dataset = tf.data.Dataset.from_tensor_slices((train_images, train_labels))
train_dataset = train_dataset.shuffle(buffer_size=1024).batch(64)

valid_dataset = tf.data.Dataset.from_tensor_slices((test_images, test_labels))
valid_dataset = valid_dataset.batch(64)

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(256, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])
```

Next, we create a file writer and specify the directory it writes its logs to:

```python
file_writer = tf.summary.create_file_writer("logs/3")
file_writer.set_as_default()
```
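Making the writer the default matters: tf.summary.scalar() only records a value when a default writer is active, and otherwise it silently writes nothing and returns False. The sketch below, assuming TensorFlow 2.x in a fresh program with no default writer set, shows both cases; the logs/demo_default_writer directory and the demo tag are chosen for illustration. It also shows writer.as_default() used as a with-block, which scopes the writer to that block instead of setting it globally as set_as_default() does.

```python
import tensorflow as tf

# No default writer exists yet, so this call records nothing.
# bool() turns the returned scalar tensor into a Python bool.
without_writer = bool(tf.summary.scalar("demo", data=1.0, step=0))

writer = tf.summary.create_file_writer("logs/demo_default_writer")
with writer.as_default():
    # Inside the with-block this writer is the default, so the value is recorded
    with_writer = bool(tf.summary.scalar("demo", data=1.0, step=0))
writer.flush()

print(without_writer, with_writer)  # False True
```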

Finally comes the main part: we write a custom training loop and, inside it, decide which metrics to log, how often to log them, and so on:

```python
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy()
optimizer = tf.keras.optimizers.Adam()
val_acc = tf.keras.metrics.SparseCategoricalAccuracy()

epochs = 3
steps_per_epoch = len(train_dataset)  # number of batches per epoch

for epoch in range(epochs):
    # Training
    for batch_i, (x_batch_train, y_batch_train) in enumerate(train_dataset):
        with tf.GradientTape() as tape:
            outputs = model(x_batch_train, training=True)
            loss_value = loss_fn(y_batch_train, outputs)
        grads = tape.gradient(loss_value, model.trainable_weights)
        optimizer.apply_gradients(zip(grads, model.trainable_weights))

        if batch_i % 10 == 0:
            # Log the training loss every 10 batches; the global step keeps
            # successive epochs moving forward along the x-axis
            step = epoch * steps_per_epoch + batch_i
            tf.summary.scalar('train_loss', data=float(loss_value), step=step)
            print("Loss at step %d: %.4f" % (step, float(loss_value)))

    # Validation: accumulate accuracy over all validation batches
    for x_batch_val, y_batch_val in valid_dataset:
        outputs = model(x_batch_val, training=False)
        val_acc.update_state(y_batch_val, outputs)

    # Log one validation-accuracy value per epoch, then reset the metric
    tf.summary.scalar('valid_acc', data=float(val_acc.result()), step=epoch)
    val_acc.reset_state()
```
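The validation part relies on the metric object accumulating counts across batches until it is reset, so the logged value covers the whole validation set and every sample carries the same weight. The plain-Python class below is a hypothetical stand-in that models this behavior; it is not the Keras implementation. With batches of 2 and 1 samples, averaging the per-batch accuracies would give (1.0 + 0.0) / 2 = 0.5, while the accumulated value is 2 of 3 correct.

```python
class StreamingAccuracy:
    """Hypothetical plain-Python stand-in for a streaming accuracy metric:
    counts accumulate across update_state() calls until reset_state()."""

    def __init__(self):
        self.correct = 0
        self.total = 0

    def update_state(self, y_true, y_prob):
        # y_prob holds one list of class probabilities per sample
        for label, probs in zip(y_true, y_prob):
            predicted = max(range(len(probs)), key=lambda i: probs[i])  # argmax
            self.correct += int(predicted == label)
            self.total += 1

    def result(self):
        # Fraction of correct predictions over every sample seen so far
        return self.correct / self.total if self.total else 0.0

    def reset_state(self):
        self.correct = 0
        self.total = 0


acc = StreamingAccuracy()
acc.update_state([0, 1], [[0.9, 0.1], [0.2, 0.8]])  # batch 1: 2 of 2 correct
acc.update_state([1], [[0.7, 0.3]])                  # batch 2: 0 of 1 correct
epoch_acc = acc.result()
print(epoch_acc)     # 0.6666666666666666
acc.reset_state()
print(acc.result())  # 0.0, ready for the next epoch
```

Forgetting the reset at the end of each epoch would mix earlier epochs into every later validation value.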

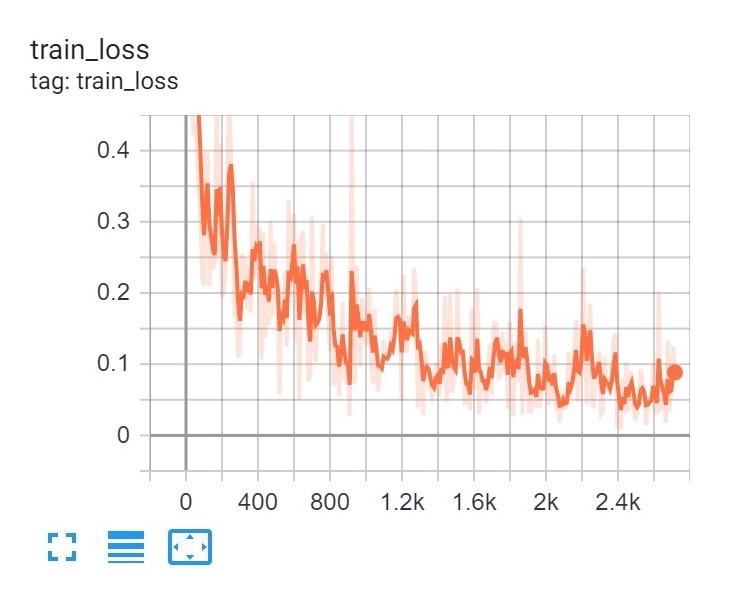

在这里,我们在训练的过程之中使用 tf.summary.scalar 这个 API 进行指标的输出;而且我们让其没 10个批次进行一次输出。

Here, we use the TF. In the training process. It’s not gonNA happen. The scalar API does the metric’s output; and we let it do it all at once in less than 10 batches.

于是我们可以得到我们在训练集上的 Loss 指标为:

So we get our Loss indicator on the training set as follows:
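The x-axis value for the training loss is step = epoch * steps_per_epoch + batch_i, so the epochs are laid end to end instead of overlapping. The sketch below works through that arithmetic for this setup, assuming the 60,000 MNIST training images, batches of 64 and logging every 10 batches as in the loop above; global_step is a hypothetical helper name.

```python
import math


def global_step(epoch, batch_i, steps_per_epoch):
    # Same formula as the training loop: epochs placed end to end on the x-axis
    return epoch * steps_per_epoch + batch_i


num_train = 60000   # MNIST training set size
batch_size = 64
# batch() keeps the final partial batch by default, hence ceil
steps_per_epoch = math.ceil(num_train / batch_size)
log_every = 10

logged_steps = [
    global_step(epoch, batch_i, steps_per_epoch)
    for epoch in range(3)
    for batch_i in range(steps_per_epoch)
    if batch_i % log_every == 0
]

print(steps_per_epoch)                     # 938
print(len(logged_steps))                   # 282 (94 points per epoch)
print(logged_steps[93], logged_steps[94])  # 930 938
```

The last point of epoch 0 is at step 930 and the first point of epoch 1 at step 938, so the logged steps never repeat.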

That completes logging custom metrics from our own training loop.

3. Summary

In this lesson we learned how to customize metric output, both by using callbacks to write metrics during training and by writing metrics from within a custom training loop.

夜流歌 ·

2024 imooc.com All Rights Reserved |
